[From the last episode: We looked at pipelines and their role in computers.]

A friend visits you from afar, and you offer your guest bedroom for them to stay in. They sleep through the night, and you're in the kitchen when they get up. You hear them say, "Good m…" By that, I don't mean that they pause their speech there; I mean that you hear that part first. And, given the context, your brain jumps way ahead, sure that it's going to hear the usual "Good morning," even before you hear the rest of the sentence.

A Wrong Guess

Except that's not what they say. They say, "Good mattress you got in there!" Your brain had decided ahead of time what it was going to hear, right up until the "…attress you got in there!" part. Suddenly it has to go back and actually listen to what was said. You've got some audio memory, so your brain remembers what it heard; it just wasn't planning on paying attention to it, since it had already decided what it was going to hear. When it didn't hear that, it had to go back to the "m" and figure out what came next. That takes your brain some time, so your response is likely to be slightly delayed.

Your brain did what's called speculation in the computer world (ok, and also in the real world). And some processors act pretty much like your brain when doing this.

Here's how that works. Remember those pipelines? You have to fill them up before they run efficiently. It takes a while for the first instruction to get through, especially with a long pipeline, but after that, results come out in quick succession, one per clock cycle.
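If you like seeing the arithmetic, here's a minimal Python sketch of that fill-up effect. It assumes a simplified pipeline where every stage takes exactly one clock cycle and a new instruction enters every cycle with no interruptions; the function name `cycles_to_finish` is just made up for illustration.

```python
def cycles_to_finish(stages: int, instructions: int) -> int:
    """Clock cycles until the last of `instructions` leaves a `stages`-deep pipeline.

    Assumes each stage takes one clock cycle and a new instruction enters
    every cycle with no interruptions.
    """
    if instructions == 0:
        return 0
    # The first instruction needs `stages` cycles to get all the way through;
    # each one after it comes out one cycle behind the one before.
    return stages + (instructions - 1)


print(cycles_to_finish(16, 1))    # 16: the first result takes the full pipeline length
print(cycles_to_finish(16, 100))  # 115: the other 99 follow one per cycle
```

So the first result is slow, but the next 99 only add 99 cycles between them.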

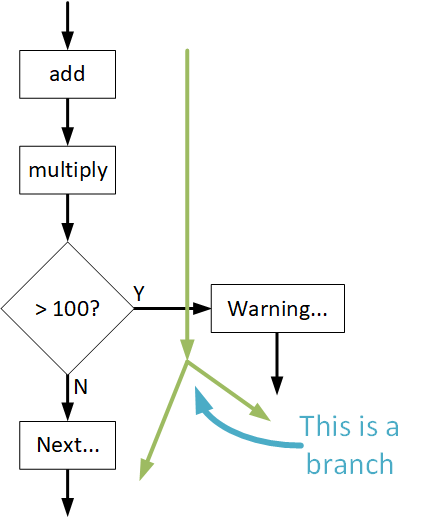

But it’s not always so straightforward. Let’s look at some fake code (I don’t want to get caught up in technicalities about any particular programming language; they’re not the point).

add a to b and store the result as x

multiply x by 3

if the result is bigger than 100, then start a routine to send a warning

if not, then proceed to the next instruction

Here we know that 3 things will definitely happen: the first instruction, the second instruction, and then a test to see whether a value is bigger than 100. But what happens after that? We don’t know; it depends on the result of the test.
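If you'd rather see the fake code in a real language, here's one possible Python rendering. The names `run` and `send_warning` are hypothetical stand-ins I've made up; the warning routine just returns a message instead of actually sending anything.

```python
def send_warning(value: int) -> str:
    # Hypothetical stand-in for "a routine to send a warning"
    return f"warning: {value} is bigger than 100"


def run(a: int, b: int) -> str:
    x = a + b              # add a to b and store the result as x
    result = x * 3         # multiply x by 3
    if result > 100:       # the test: which way we go depends on its outcome
        return send_warning(result)
    return "next instruction"  # otherwise, proceed to the next instruction


print(run(10, 20))  # 30 * 3 = 90, not bigger than 100
print(run(20, 20))  # 40 * 3 = 120, so the warning branch runs
```

The `if` line is the spot where the path splits: nothing before it tells you which `return` will happen.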

Branches Create a Challenge

When we start, we fill the pipeline with the “add” instruction. Heck, since this is presumably in the middle of some program, the pipeline may already be full, and we’re just putting the “add” instruction into the pipeline. Then the “multiply” instruction goes into the pipeline next. And then the “test” goes into the pipeline. So far so good.
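To picture that filling-up, here's a rough Python sketch that prints which instruction sits in which stage on each clock cycle. It uses a hypothetical 4-stage pipeline so the output stays readable (real ones can be much longer) and assumes one stage per cycle; `pipeline_trace` is an illustrative name, not any real tool. Notice that once the test goes in, the sketch has nothing left to feed into the first stage.

```python
def pipeline_trace(instructions: list[str], stages: int) -> list[list[str]]:
    """For each clock cycle, list what sits in each stage (first stage first).

    Assumes one new instruction enters per cycle and each stage takes one cycle.
    """
    total_cycles = stages + len(instructions) - 1
    rows = []
    for cycle in range(total_cycles):
        row = []
        for stage in range(stages):
            # Instruction number i entered at cycle i, so at this cycle it has
            # moved forward `cycle - i` stages.
            i = cycle - stage
            row.append(instructions[i] if 0 <= i < len(instructions) else "-")
        rows.append(row)
    return rows


for cycle, row in enumerate(pipeline_trace(["add", "multiply", "test"], stages=4), start=1):
    print(f"cycle {cycle}: " + " | ".join(f"{s:8}" for s in row))
```

Each printed row is one clock cycle, reading left to right from the first stage to the last; a dash means that stage is empty.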

But now we're past the test, and we need to put the next instruction into the pipeline. Except we don't know what that's going to be, because we don't know the result of the test yet. Remember: just because an instruction is in the pipeline doesn't mean it has been carried out. It still has all of those stages to go through before it's done. If the pipeline is 16 stages long, with one stage per clock cycle, then you have to wait 16 clock cycles until you get the result. So how do we keep the pipeline full if we need to keep feeding it instructions, when we won't know which instructions are the right ones for another 15 cycles or so?
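One way out would be to simply stop feeding the pipeline and wait. Here's a rough Python sketch of what that costs, assuming one cycle per stage and that the test's result is known only when it comes out of the last stage. The function names and the counts of instructions before and after the test are made up for illustration.

```python
def cycles_if_waiting(stages: int, before: int, after: int) -> int:
    """Cycles to finish `before` instructions, one test, then `after` instructions,
    if the processor stops feeding the pipeline after the test until the test
    comes out the other end (no guessing). One cycle per stage is assumed."""
    # Everything up to and including the test flows back to back.
    test_done = stages + (before + 1) - 1
    if after == 0:
        return test_done
    # Only now do we know which instructions come next, so the pipeline
    # starts filling again from empty.
    return test_done + stages + (after - 1)


def cycles_without_branch(stages: int, total: int) -> int:
    """Same pipeline, but every instruction is known ahead of time."""
    return stages + (total - 1)


waiting = cycles_if_waiting(16, before=2, after=10)  # add, multiply, test, then 10 more
steady = cycles_without_branch(16, 2 + 1 + 10)
print(waiting, steady, waiting - steady)  # 43 28 15
```

With 16 stages, waiting costs 15 extra cycles compared with a program that has no branch at all, and it costs that again every time a test comes along.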

The test we’re looking at is called a branch. The code has been flowing along in a straight line, but now that path branches: we can go one way or we can go the other way. We just don’t know which branch we’re going to take until we get there.

Processors Can Guess Too

Faced with a branch like this, some processors do what your brain did when it heard "Good m…": they guess (or speculate) which way the test will go. The processor picks one branch and keeps filling the pipeline with that branch's instructions. That's great if it turns out it picked the right branch; everything keeps humming right along.

But what happens if it picked the wrong branch? Now the pipeline is full of the wrong instructions, and, quite literally, the processor has to empty the pipeline and restart it with the right instructions. Just as your brain delayed your response to the "mattress" comment, this causes a small delay in the processor, since the right instructions now have to make their way through the pipeline from the beginning.
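Here's a minimal Python sketch of the difference between a right and a wrong guess, counting cycles from the moment the test enters the pipeline. It assumes one cycle per stage, one new instruction fed per cycle, and that the test's outcome is only known when it leaves the last stage; `after_branch` is a made-up name for illustration.

```python
def after_branch(stages: int, guessed_right: bool) -> tuple[int, int]:
    """Return (instructions thrown away, cycle when the first correct instruction
    after the test finishes), counting from the cycle the test enters.

    Assumes one cycle per stage, one new instruction fed per cycle, and that the
    test's outcome is known only when it leaves the last stage.
    """
    test_done = stages    # test enters at cycle 1 and leaves at cycle `stages`
    guessed = stages - 1  # guessed instructions fed in during cycles 2..stages
    if guessed_right:
        # The first guessed instruction entered at cycle 2, so it finishes
        # one cycle right behind the test.
        return 0, test_done + 1
    # Wrong guess: empty the pipeline and start the right instruction next cycle.
    restart = test_done + 1
    return guessed, restart + stages - 1


print(after_branch(16, guessed_right=True))   # (0, 17)
print(after_branch(16, guessed_right=False))  # (15, 32)
```

With 16 stages, a wrong guess throws away 15 instructions and pushes the first useful result back by 15 cycles.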

This is called "branch misprediction," and it's more likely to be an issue in high-performance processors (i.e., big ones), both because they have longer pipelines (so there's more to throw away and refill) and because they're more likely to speculate in the first place. Those processors partly make up for it with really fast clocks, so they can catch up again pretty quickly. And, realistically, we don't notice individual delays like these, although if mispredictions happen often, they can slow things down noticeably.
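To see why pipeline length matters, here's a back-of-the-envelope Python sketch. Every number in it is hypothetical, not a measurement of any real chip: a 5-stage "little" processor at 1 GHz versus a 16-stage "big" one at 3 GHz, both running 1,000 instructions with 50 wrong guesses. It assumes each wrong guess costs exactly one full refill (stages minus 1 cycles) and nothing else.

```python
def total_cycles(instructions: int, stages: int, mispredictions: int) -> int:
    """Rough cycle count: fill the pipeline once, stream the instructions, and
    pay (stages - 1) extra cycles each time a wrong guess forces a refill.
    Assumes one cycle per stage and that tests resolve at the last stage."""
    fill_and_stream = stages + (instructions - 1)
    penalty = mispredictions * (stages - 1)
    return fill_and_stream + penalty


def nanoseconds(cycles: int, clock_ghz: float) -> float:
    # At 1 GHz one cycle takes 1 ns; a faster clock makes each cycle shorter.
    return cycles / clock_ghz


# Hypothetical processors: same program, same number of wrong guesses.
little = total_cycles(1000, stages=5, mispredictions=50)  # 1204 cycles
big = total_cycles(1000, stages=16, mispredictions=50)    # 1765 cycles
print(little, big)
print(nanoseconds(little, 1.0), nanoseconds(big, 3.0))
```

The big processor spends 750 cycles on refills versus 200 for the little one, but with these made-up clock speeds it still finishes sooner, which is the catching-up described above.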

So why are we talking about it? Isn’t it an obscure detail? Well, yeah, kinda. But for those of you curious about how things work, I thought it would be interesting – especially since there are real-world analogies (like your brain). But, more importantly, it’s also an example of something that affects one size of processor (big) more than the other (little).
