[From the last episode: We saw how virtual memory helps resolve the differences between where a compiler thinks things will go in memory and the real memories in a real system.]

We’ve talked a lot about memory – different kinds of memory, cache memory, heap memory, virtual memory. Now that we have the basics covered, let’s look at the bigger picture: what kinds of systems use which kinds of memory, and where will we find those systems?

We’re going to start with the simplest systems and work up from there. The edge is where the simplest systems reside. Note that, in all of these drawings, I’m focusing on where programs are stored. So all of the arrows point toward the CPU, since the CPU reads programs rather than writing them. Of course, we may need to store data too. We can do that with the following schemes as well, although it’s likely to be easier with the more complex ones.

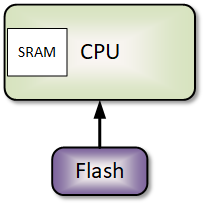

Cheap, Small, Low-Power, Slowish: Edge

Let’s say we have a super-small system that doesn’t have to do much, and what it does have to do doesn’t have to be done quickly. In this situation, we can store the software in flash memory – and, even though that memory is pretty slow, it’s fast enough that the program can run directly out of the flash memory. There’s no need to move it into DRAM first; such a system probably doesn’t even have any DRAM. A setup where the program runs directly from flash is referred to as execute-in-place (or XIP), since the code doesn’t have to be moved first. The CPU will have access to some internal SRAM as working memory. This will be an edge device.
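To make execute-in-place concrete, here is a host-side toy model in C. It is not real firmware: the instruction set and the names `flash_program`, `sram`, and `run_xip` are hypothetical, made up for this sketch. The program sits in a read-only `const` array standing in for flash, and the fetch loop reads each instruction straight from that array. Only working data goes into a small array standing in for the CPU's internal SRAM. On a real microcontroller, the CPU fetches instructions from memory-mapped flash over its bus, with no interpreter involved.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical toy instruction set, for illustration only. */
enum { OP_LOAD, OP_ADD, OP_STORE, OP_HALT };

typedef struct {
    uint8_t op;   /* which operation */
    uint8_t arg;  /* immediate value or SRAM slot */
} Instr;

/* The program lives in read-only memory, standing in for flash.
   It computes 2 + 3 and stores the result in SRAM slot 0. */
static const Instr flash_program[] = {
    {OP_LOAD, 2}, {OP_ADD, 3}, {OP_STORE, 0}, {OP_HALT, 0},
};

/* Small working memory, standing in for the CPU's internal SRAM. */
static uint8_t sram[16];

/* Execute-in-place: each instruction is fetched directly from the
   "flash" array; the program is never copied anywhere first. */
static void run_xip(const Instr *code, size_t len)
{
    uint8_t acc = 0;                  /* accumulator register */
    for (size_t pc = 0; pc < len; pc++) {
        Instr in = code[pc];          /* fetch straight from flash */
        switch (in.op) {
        case OP_LOAD:  acc = in.arg; break;
        case OP_ADD:   acc = (uint8_t)(acc + in.arg); break;
        case OP_STORE:
            assert(in.arg < sizeof sram);
            sram[in.arg] = acc;       /* working data goes to SRAM */
            break;
        case OP_HALT:  return;
        }
    }
}
```

Calling `run_xip(flash_program, 4)` leaves 5 in `sram[0]`, and at no point is the program itself moved out of the read-only array.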

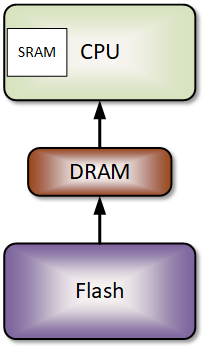

Cheap, Small, Low-Power, Not Quite So Slow: Edge

This is similar to the previous system, except that it needs to run faster than is possible when executing directly out of flash. So now we need some DRAM: the code is copied from flash into DRAM, and the CPU runs it from there. (The flash still holds the permanent copy, since DRAM loses its contents when the power goes off.) We’ll assume for now that, while it needs to run faster than flash allows, it doesn’t need to run faster than DRAM allows. This would still be an edge device.
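A host-side toy model in C can show this load-then-run pattern. It is a sketch, not real boot code: the instruction set and the names `flash_image`, `dram_code`, `dram_data`, `load_into_dram`, and `run_from_dram` are hypothetical. The only step that differs from running straight out of flash is a copy at startup: the program image is copied with `memcpy` out of a read-only "flash" array into a writable "DRAM" array, and every later fetch comes from that copy.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical toy instruction set, for illustration only. */
enum { OP_LOAD, OP_ADD, OP_STORE, OP_HALT };

typedef struct {
    uint8_t op;
    uint8_t arg;
} Instr;

/* Program image kept permanently in "flash" (read-only). */
static const Instr flash_image[] = {
    {OP_LOAD, 40}, {OP_ADD, 2}, {OP_STORE, 0}, {OP_HALT, 0},
};

#define DRAM_WORDS 64
static Instr   dram_code[DRAM_WORDS]; /* code region of "DRAM" */
static uint8_t dram_data[16];         /* working data in "DRAM" */

/* Startup step: copy the program image from flash into DRAM. */
static size_t load_into_dram(const Instr *image, size_t len)
{
    assert(len <= DRAM_WORDS);
    memcpy(dram_code, image, len * sizeof *image);
    return len;
}

/* From here on, every instruction is fetched from the DRAM copy. */
static void run_from_dram(size_t len)
{
    uint8_t acc = 0;                  /* accumulator register */
    assert(len <= DRAM_WORDS);
    for (size_t pc = 0; pc < len; pc++) {
        Instr in = dram_code[pc];     /* fetch from DRAM, not flash */
        switch (in.op) {
        case OP_LOAD:  acc = in.arg; break;
        case OP_ADD:   acc = (uint8_t)(acc + in.arg); break;
        case OP_STORE:
            assert(in.arg < sizeof dram_data);
            dram_data[in.arg] = acc;
            break;
        case OP_HALT:  return;
        }
    }
}
```

After `load_into_dram` returns, the flash array is never read again; `run_from_dram` computes 40 + 2 and leaves 42 in `dram_data[0]`.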

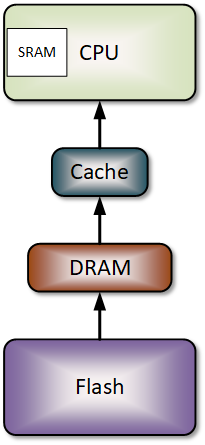

Moderate Cost, Lowish Power, Faster Yet: Edge

The step we take here is the same as the one we just took: the memory isn’t fast enough by itself, so we make it faster. In this case, that means adding a cache, a small, fast SRAM that holds recently used pieces of DRAM, to speed up DRAM access. This is still likely to be an edge device – the fastest of them.
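The cache idea can be sketched as a tiny direct-mapped cache simulator in C. This is a deliberate simplification, and the sizes (`LINE_BYTES`, `NUM_LINES`) and names are assumptions for illustration: each memory line can live in exactly one cache slot, so there is no choice about what to evict, and writes are ignored. Real CPU caches are usually set-associative and must also get changes back to DRAM. The point is only to show why repeated reads of nearby addresses become fast: after the first miss brings a line in from DRAM, the following reads hit in the cache.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define LINE_BYTES 16u  /* bytes per cache line (assumed) */
#define NUM_LINES  8u   /* number of lines in the cache (assumed) */

typedef struct {
    bool     valid;     /* does this slot hold anything yet? */
    uint32_t tag;       /* which memory line is stored in this slot */
} CacheLine;

typedef struct {
    CacheLine lines[NUM_LINES];
    unsigned  hits;
    unsigned  misses;
} Cache;

/* Look up one read. On a miss, pretend to fetch the line from slow
   DRAM and remember it in the slot. Returns true on a hit. */
static bool cache_access(Cache *c, uint32_t addr)
{
    uint32_t line_addr = addr / LINE_BYTES;   /* memory line number */
    uint32_t index = line_addr % NUM_LINES;   /* the only slot it can use */
    uint32_t tag = line_addr / NUM_LINES;     /* tells lines sharing a slot apart */
    CacheLine *slot = &c->lines[index];

    assert(c != 0);
    if (slot->valid && slot->tag == tag) {
        c->hits++;                            /* fast path: served from SRAM */
        return true;
    }
    slot->valid = true;                       /* miss: slow DRAM read, keep a copy */
    slot->tag = tag;
    c->misses++;
    return false;
}
```

Reading bytes 0 through 63 twice costs only 4 misses (one per 16-byte line) and 124 hits. Reading address 128 then evicts the line holding address 0, because both map to slot 0, so the next read of address 0 misses again.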

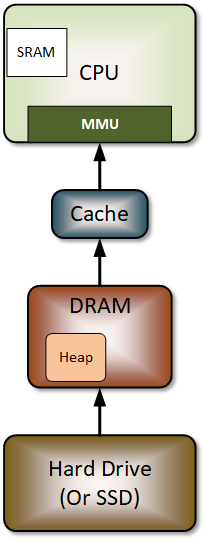

Fast, Big, Cost Is Secondary: the Cloud

Once we’re in the cloud, power is less of an issue. It’s still important – data centers use a TON of energy – but it’s not like a battery is going to go dead. The CPU is going to be faster and more complex, and we will store programs on a hard drive or SSD. Because many programs are likely to be running at the same time (unlike in most edge devices), the CPU has an MMU, which translates virtual addresses into physical ones, so that it can use virtual memory to manage where everything is in DRAM.
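What the MMU does on each access can be sketched as a lookup in a single-level page table. This C sketch assumes 4 KiB pages and a tiny 16-page virtual address space; `PageTableEntry` and `translate` are hypothetical names, and real MMUs typically walk multi-level tables in hardware and cache recent translations in a TLB. The virtual address is split into a page number and an offset. The page number selects a table entry saying which physical frame in DRAM holds that page, and the offset carries over unchanged.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SIZE 4096u  /* 4 KiB pages (a common size, assumed here) */
#define NUM_PAGES 16u    /* tiny virtual address space for illustration */

typedef struct {
    bool     present;    /* is this page currently loaded in DRAM? */
    uint32_t frame;      /* physical page (frame) number in DRAM */
} PageTableEntry;

/* Translate a virtual address through a single-level page table.
   Returns false on a page fault (page not in DRAM); an OS would then
   load the page from the disk or SSD, update the table, and retry. */
static bool translate(const PageTableEntry table[NUM_PAGES],
                      uint32_t vaddr, uint32_t *paddr)
{
    uint32_t page   = vaddr / PAGE_SIZE;  /* virtual page number */
    uint32_t offset = vaddr % PAGE_SIZE;  /* position within the page */

    assert(paddr != NULL);
    if (page >= NUM_PAGES || !table[page].present)
        return false;
    *paddr = table[page].frame * PAGE_SIZE + offset;
    return true;
}
```

If virtual page 0 is mapped to frame 7, virtual address `0x10` translates to physical address `7 * 4096 + 0x10`. Giving each running program its own table is what lets several programs use the same virtual addresses without colliding in DRAM.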

While my focus has been on where programs are stored, this is also the configuration that’s most likely to use heap memory within DRAM.
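For a sense of what heap use looks like from a program’s side, here is a minimal C sketch using the standard `malloc` and `free`; `make_squares` is a made-up example function. The program asks the heap for a block whose size is known only at run time, uses it, and hands it back when it’s done. Where that block lands in DRAM is up to the allocator, not the program.

```c
#include <assert.h>
#include <stdlib.h>

/* Request a buffer of n ints from the heap and fill it with squares.
   The caller owns the result and must free() it.
   Returns NULL if the heap cannot satisfy the request. */
static int *make_squares(size_t n)
{
    int *buf = malloc(n * sizeof *buf);  /* size decided at run time */
    if (buf == NULL)
        return NULL;
    for (size_t i = 0; i < n; i++)
        buf[i] = (int)(i * i);
    return buf;
}
```

`make_squares(10)` returns a block whose last element is 81; the caller then passes it to `free` so the heap can reuse that memory for later requests.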

Hopefully this helps you see where each of the different memory notions we’ve discussed fits into the grand scheme of things. Now… there’s one more aspect to all of this: what if there were more than one CPU in the system? Could you do more work then? How would these diagrams change? We’re going to head down that path next.
