[From the last episode: We saw how engineers organize working memory in computing systems.]

We’ve seen how heap memory can provide a flexible way of allocating chunks of memory on the go. The chunks aren’t planned ahead of time; it’s a real-time thing: when the program, for whatever reason, needs more memory, the operating system finds an available chunk and allocates it to the program. The program can use that chunk until it’s done with it, at which time it releases the chunk for later use by the same or a different program.

Seems pretty straightforward. Except for one glitch: the released chunks end up looking like holes in the memory. If some new allocation request is for a chunk that’s the same size as or smaller than the hole, it works: the operating system can (at least in theory) simply reallocate that hole to the new request. But what happens if you’ve been using the memory for a while, allocating and releasing chunks, and you “use up” the memory – except for those holes? And now you need a chunk bigger than any of the holes?

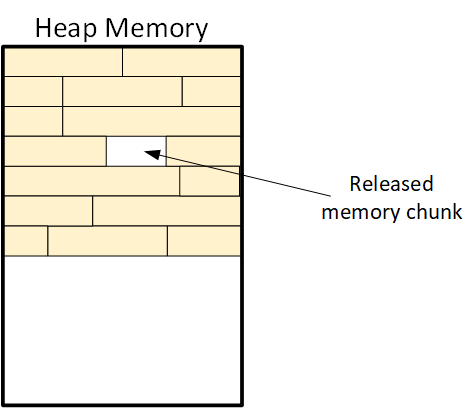

Let’s look at a specific example – a simple one first. Let’s say we’ve been busy allocating memory, and then we’re done with a small chunk, so we release it. The memory then looks like this:

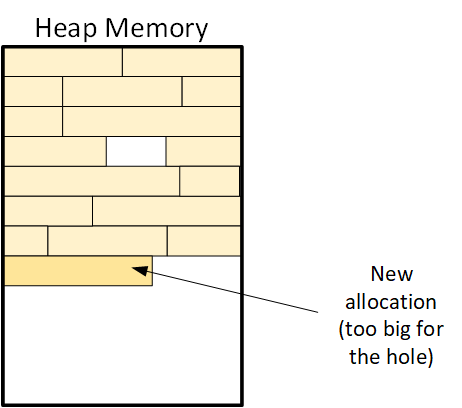

Now, let’s say we need to allocate a new chunk of memory. If the new chunk is small enough, we could fit it into the hole. But what if it isn’t? Well, we have to allocate it from the unused portion of the memory, leaving the hole in place.

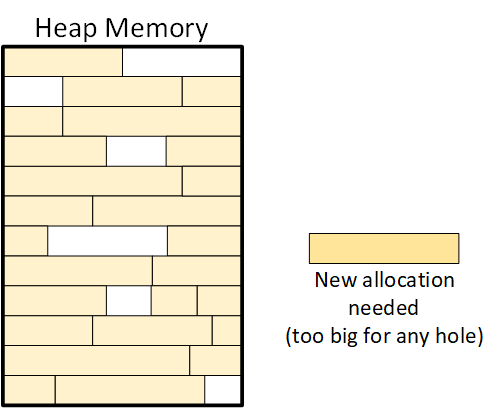

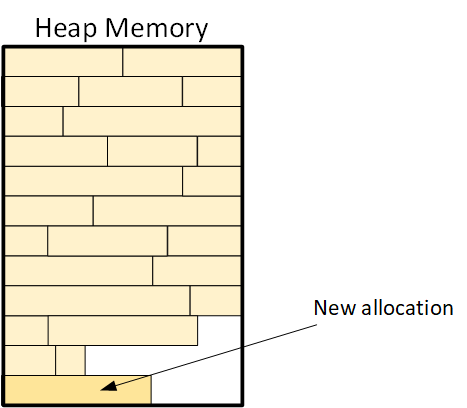
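The choice just described (reuse a hole if the request fits, otherwise take from the unused portion) can be sketched with a toy model. This is only an illustration, not how any particular operating system does it: the 100-unit heap, the `allocate` and `release` helpers, and the "first fit" search order are all assumptions made for the example.

```python
# Toy heap: a list of [start, size, owner] blocks kept in address order.
# owner is None for free space (a hole or the unused tail). All names
# and sizes here are hypothetical, chosen only for illustration.
HEAP_SIZE = 100


def allocate(heap, size, owner):
    """First fit: use the first free block that is large enough.

    Returns the start address, or None if no single free block fits.
    """
    for i, (start, block_size, block_owner) in enumerate(heap):
        if block_owner is None and block_size >= size:
            heap[i] = [start, size, owner]
            if block_size > size:
                # Whatever is left over stays free, right after the new chunk.
                heap.insert(i + 1, [start + size, block_size - size, None])
            return start
    return None


def release(heap, owner):
    """Mark the owner's chunk as free, leaving a hole where it was."""
    for block in heap:
        if block[2] == owner:
            block[2] = None


heap = [[0, HEAP_SIZE, None]]   # one big unused region to start
allocate(heap, 30, "A")
allocate(heap, 10, "B")
allocate(heap, 20, "C")
release(heap, "B")              # leaves a 10-unit hole at address 30

d_start = allocate(heap, 25, "D")  # too big for the hole: comes from the unused tail
e_start = allocate(heap, 8, "E")   # small enough: lands in the hole

print("D starts at", d_start)
print("E starts at", e_start)
```

In this sketch, the 25-unit request skips the 10-unit hole and is carved from the unused region, while the 8-unit request drops into the hole and leaves a 2-unit sliver behind. For brevity, `release` does not merge neighboring free blocks, which a real allocator typically would.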

That works this time, but what happens if the unused part of memory goes away – we’ve filled it up – except for the holes that have appeared where chunks were released? The memory may look something like this:

Now what? There’s enough free memory for the new allocation, but the problem is that it’s all broken up all over the place. Said another way, it’s fragmented. We really don’t want to break up the allocation and spread it over multiple holes. That would use more memory (for managing where all the pieces are), and it would slow things down.

In fact, that’s exactly what happens with hard drives. Remember defragmenting your drive? That was a similar problem: files were getting broken into multiple fragments, and that slowed down performance. In this case, we don’t want to break things up for the same reason.

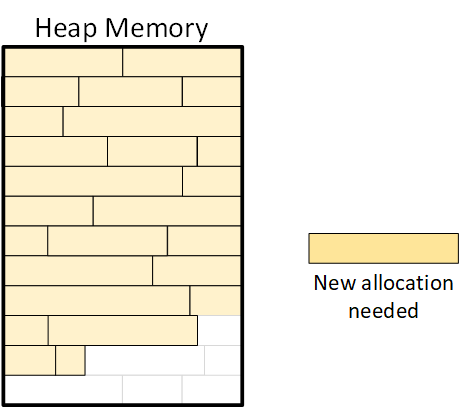
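To put numbers on the fragmented situation described above, here is a small sketch. The memory layout and the 30-unit request are made up for the example; the point is only that the total free space and the largest single hole are two different questions.

```python
# Hypothetical snapshot of a "full" heap: (start, size, in_use) in address order.
blocks = [
    (0, 20, True),
    (20, 10, False),   # hole
    (30, 25, True),
    (55, 8, False),    # hole
    (63, 22, True),
    (85, 15, False),   # hole
]
request = 30  # size of the new chunk we want (an assumed value)

holes = [size for _, size, in_use in blocks if not in_use]
total_free = sum(holes)
largest_hole = max(holes)

fits_in_total = request <= total_free        # is there enough free memory overall?
fits_contiguously = request <= largest_hole  # does any one hole hold it?

print("total free:", total_free, "largest hole:", largest_hole)
print("enough memory overall:", fits_in_total)
print("fits in a single hole:", fits_contiguously)
```

With these numbers there are 33 free units in total, but the biggest hole is only 15 units, so a 30-unit request can't be satisfied in one piece even though enough memory is technically free.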

So we’re left with the problem: how do we allocate that new chunk? We first need to reorganize the memory and move things around to get all of those holes together into a larger chunk of available memory. That means closing up the holes and “pushing” the holes to the end of the memory where they can be reused. (The gray lines at the bottom of the memory below indicate the free chunks that got relocated down there.)

Now we have free memory that’s big enough to accommodate the new chunk we need.
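The reorganizing step can be sketched as follows. This is a simplified illustration under assumed names and sizes (the `compact` function and the block layout are hypothetical), not a description of any specific system: it slides every in-use chunk toward the start of memory so that all the free space ends up as one chunk at the end.

```python
def compact(blocks, heap_size):
    """Slide in-use chunks toward address 0 and merge all free space at the end.

    blocks: list of (start, size, owner) in address order; owner is None for a hole.
    Returns (new_blocks, relocations), where relocations maps each owner to
    its (old_start, new_start) pair.
    """
    compacted = []
    relocations = {}
    next_free = 0
    for start, size, owner in blocks:
        if owner is None:
            continue  # holes are dropped here and reappear as one chunk below
        compacted.append((next_free, size, owner))
        relocations[owner] = (start, next_free)
        next_free += size
    if next_free < heap_size:
        compacted.append((next_free, heap_size - next_free, None))
    return compacted, relocations


# Hypothetical fragmented heap of 100 units with three holes (10 + 8 + 15).
blocks = [
    (0, 20, "A"), (20, 10, None),
    (30, 25, "B"), (55, 8, None),
    (63, 22, "C"), (85, 15, None),
]

compacted, relocations = compact(blocks, 100)
for block in compacted:
    print(block)
```

After compaction, the three holes become a single 33-unit free chunk starting at address 67. The `relocations` table hints at where the cost comes from: in a real system, the data in each moved chunk has to be copied, and anything that refers to the old address has to be updated.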

That process of moving things around to bring the free memory chunks together is sometimes referred to as garbage collection. (Strictly speaking, the moving step is usually called compaction; garbage collection more broadly means reclaiming memory that’s no longer in use.) And yeah, it takes some time to do. It’s also hard to predict when it will be needed, since it all depends on who needs memory and who releases it at what time. The process is usually fast enough that you may not notice it, but it can make a difference.

For example, in an industrial IoT setting, a motor might be connected to a sensor that detects whether the motor is getting too hot. If so, perhaps it slows the motor down. But to avoid damage, you want the response to overheating to be very fast. If, out of bad luck, that response had to wait for garbage collection to finish first, it might take too long. So applications like this that rely on quick response would do better with fixed memory locations than with heap memory.

The following video illustrates the process of allocating, freeing, and garbage-collecting heap memory.
