[From the last episode: We started this new thread with a broad introduction to machine learning.]

While neuromorphic neural networks – that is, ones that work the way our brains work – may still be a ways off in the future, someone came up with a different way to emulate those networks, even though the emulation doesn’t work the way a brain does. These networks are generically referred to as artificial neural networks, or ANNs. The new approach got its start in the 70s, but it took so much computing that the machines of the day couldn’t handle it. More powerful computing chips and some other ideas that reduced the amount of required computing brought life back to the field in the 90s, and now this approach is all the rage.

The thing about it is that it’s all math. It’s all abstract. That’s both the power and the weakness of it. To identify a dog, you can show an ANN a series of pictures, and it will pick out the features that make a dog a dog – if the set of pictures it gets contains lots of different dogs in lots of different conditions. There’s no magic here: if all the system sees is golden retrievers as examples, then the only dogs it will recognize will be – surprise! – golden retrievers.
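One simple way to spot a lopsided set of pictures before any training happens is to count how often each label shows up. The sketch below is only an illustration: the labels, the ten-picture sample set, and the `label_shares` helper are all made up for this example, and a real training set would be far larger.

```python
from collections import Counter


def label_shares(labels):
    """Return each label's share of the sample set as a fraction of the total."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return {}  # an empty sample set has no shares to report
    return {label: count / total for label, count in counts.items()}


# Hypothetical sample set: labels for 10 training pictures.
sample_labels = ["golden retriever"] * 8 + ["poodle"] + ["beagle"]

shares = label_shares(sample_labels)
for label, share in sorted(shares.items(), key=lambda item: -item[1]):
    print(f"{label}: {share:.0%}")
# golden retriever: 80%
# poodle: 10%
# beagle: 10%
```

A set like this one would mostly teach the network about golden retrievers, which is the problem described above.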

You may sometimes hear about facial recognition software that doesn’t work well on all people. That’s usually a result of too many of one group of people in the sample set and not enough of others – something that’s referred to as bias. It might not be a surprise that these systems tend to learn more about Caucasian people than about minority groups in the US, which explains why the systems don’t always work as accurately for other groups.

ANN Structure

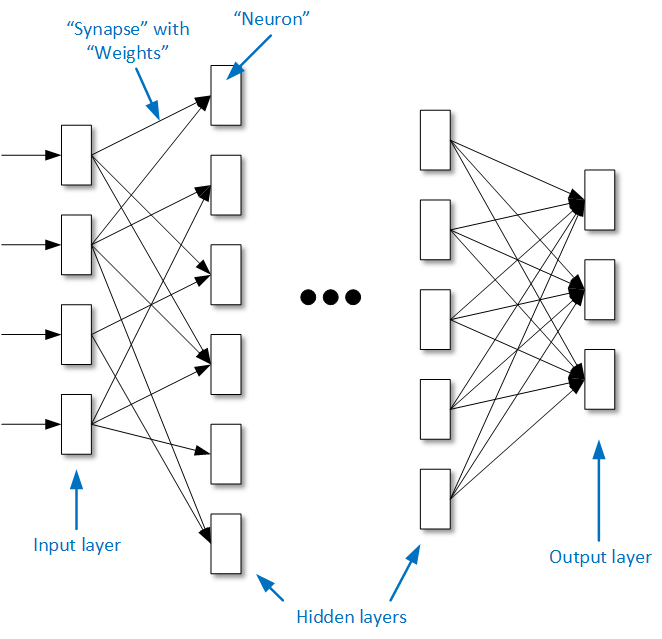

Neural nets started out as very simple structures, but have become anything but in the last several years. These networks are organized in layers, where each layer accomplishes something and passes that result on to the next layer. The first ANNs had a single layer. Today’s ANNs can have hundreds of layers.

Because these bigger models were initially viewed as being “deeper” than a single-layer model, we called them deep neural networks, or DNNs. Many folks talk about DNNs as if they’re fundamentally different from other kinds of neural networks. In fact, they’re not. They simply have more layers than the others – which does make them able to do more.

The original ANNs had only input and output layers. The “depth” was achieved by adding “hidden” layers in the middle. Each “box” in a layer is referred to as a neuron. The neurons are fed by signals from earlier layers (or from the inputs at the beginning), and there’s some math that we’ll talk about later that acts as what’s called the synapse. These names are convenient, but what they do can’t be compared to their real-life counterparts.
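To make the layer picture concrete, here is a minimal sketch that describes a network as nothing more than a list of neuron counts, one per layer. It assumes every neuron connects to every neuron in the next layer (a "fully connected" network), which is an assumption made for this example and not something every real network does. The sizes and the `count_connections` helper are hypothetical.

```python
def count_connections(layer_sizes):
    """Count the synapses in a fully connected network.

    layer_sizes lists the number of neurons in each layer, from the
    input layer to the output layer. Each neuron is assumed to feed
    every neuron in the following layer.
    """
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical network: 4 inputs, hidden layers of 5 and 3 neurons, 2 outputs.
layer_sizes = [4, 5, 3, 2]
hidden_layers = layer_sizes[1:-1]  # everything between input and output

print(len(hidden_layers))              # 2
print(count_connections(layer_sizes))  # 4*5 + 5*3 + 3*2 = 41

# An "original" style network with only input and output layers.
shallow_sizes = [4, 2]
print(len(shallow_sizes[1:-1]))          # 0 hidden layers
print(count_connections(shallow_sizes))  # 4*2 = 8
```

Adding hidden layers is what makes the network “deeper,” and it also drives up the number of connections quickly.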

Building a Network

Engineers have worked hard developing various network structures, and the ones that seem to work well have become popular. You may hear names like ResNet50 or MobileNet or YOLO: these are names for different structures that are good for different applications, and they may have their own strengths in terms of requiring more or less computation or memory or power to run. Some people develop their own custom networks; far more leverage these well-known ones.

You’ll see above that each neuron has one or more synapses, and each synapse is associated with a weight (more generally called a parameter). It’s the weights that cause the need for all of the math. In a really general way, you could think of the weights as saying which of the inputs (or prior layer’s signals) are the most important ones.
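As a rough illustration of how weights mark some inputs as more important than others, the sketch below multiplies each input by its weight and adds up the results. This is a deliberate simplification: real networks usually do more than a plain weighted sum, and the numbers and the `neuron_output` helper here are invented for the example.

```python
def neuron_output(inputs, weights):
    """Scale each input by its synapse's weight, then add the results up."""
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    return sum(x * w for x, w in zip(inputs, weights))


# Hypothetical signals arriving from three neurons in the prior layer.
inputs = [2.0, 4.0, 8.0]

# Hypothetical weights: the first input counts the most per unit of signal,
# the second counts less, and the third is ignored entirely.
weights = [0.5, 0.25, 0.0]

result = neuron_output(inputs, weights)
print(result)  # 2.0*0.5 + 4.0*0.25 + 8.0*0.0 = 2.0
```

Note that the largest input (8.0) contributes nothing because its weight is zero. Multiply this by every synapse in every layer, and you can see where all the math comes from.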

These are the elements of any of the many ANNs in use today. They’ve been remarkably successful, although they still require an extreme amount of computing – often in the data center (although that’s changing). And the energy consumed for all the computing is an ongoing problem to solve.

Next we’ll look at the high-level notions of teaching these models and then using them.
