[From the last episode: We saw how a problem might be solved by splitting the work up and distributing it over multiple computers.]

Distributed computing can be a useful way of solving hard problems. But there’s a limitation: all of that information has to be communicated between the computers to get the job done. And that can take time. Perhaps there’s a faster way?

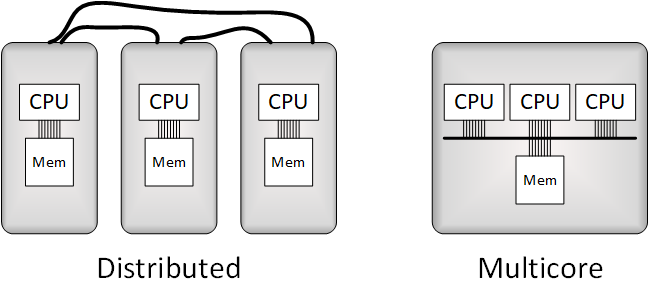

In many cases, yes, there is. Instead of using multiple computers, what if we put more than one CPU (central processing unit, the main microprocessor in a computer) inside one computer so that they can all run truly concurrently? This is now a thing, and it’s called multicore (or multi-core) computing. In this context, a core is a CPU.

Now… if a CPU is the heart of a computer, then how is this any different from distributed computing, which, of course, uses multiple CPUs that just happen to reside in different boxes?

There are (at least) two major differences: connections and memory.

A Fat Connection

You might think of a CPU as its own chip, and so, in this case, it might seem like we’re putting multiple chips in a box. We could do that, but that’s not usually how it happens. Instead, the folks who make the CPUs put multiple CPUs on a single chip. The chip that’s powering your computer might have two or even four CPUs in it. And some specialized chips may have many more CPUs than that (it’s just that you and I usually don’t need that for everyday computing).

You may remember that an important part of distributed computing is the connection between the boxes so that the problem can be sent over and the result can be sent back. Well, when you’re connecting two boxes, you’re using regular wire. And there are only so many wires you can realistically run between the systems. And the wires are long by computer standards, so the smallish number of wires you have is also slowish.

Inside a single chip, you’re no longer using regular wires. Instead, we make connections inside the chip by printing really narrow metal lines on top of the silicon. While regular wires might look thin, they’re huge when compared to the metal lines on a chip. For perspective, a human hair is between 17 and 180 μm thick, while these printed metal lines have widths in the tens of nanometers, which makes them roughly hundreds to thousands of times narrower. You might have 500 or even more than 1,000 lines connecting two cores on a chip; you’d need a really fat bundle of wires to do that between computers.
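If you want to check that scale comparison yourself, here is a minimal sketch of the arithmetic. The hair thicknesses come from the paragraph above; the 10 to 90 nm range is only an assumed stand-in for "tens of nanometers," so the exact ratio you get depends on the widths you pick.

```python
# Rough scale comparison: human hair vs. on-chip metal line.
# Hair figures are from the text; the line widths are an ASSUMPTION
# standing in for "tens of nanometers".
HAIR_UM = (17, 180)   # hair thickness range, in micrometers
LINE_NM = (10, 90)    # assumed metal line width range, in nanometers

NM_PER_UM = 1000      # nanometers in one micrometer


def size_ratio(hair_um, line_nm):
    """Return how many times wider a hair is than a metal line."""
    return hair_um * NM_PER_UM / line_nm


smallest = size_ratio(HAIR_UM[0], LINE_NM[1])  # thinnest hair, widest line
largest = size_ratio(HAIR_UM[1], LINE_NM[0])   # thickest hair, narrowest line

print(f"A hair is roughly {smallest:.0f} to {largest:.0f} times wider")
```

With these assumed widths, the ratio runs from a couple hundred up to many thousands.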

In addition, things are really close together on a single chip, so, not only are there more connections available, those connections are also much shorter, meaning that signals get across them faster and that we can use faster communication techniques.

Shared Memory

Then there’s another consideration that’s completely separate from the connection: memory. There are different ways to organize multiple cores in a multicore chip, but the simplest one has all the cores looking at the same memory. That makes an enormous difference. Take our Excel spreadsheet example from last week. That spreadsheet is in memory. In order for another computer to help, you might literally have to move part of that spreadsheet to the memory of the other computer.

With multicore, no such data movement is needed. It’s all accessible by all of the cores. Yeah, if one core is reading something, you might have to wait a tick until another core can get to it, but that’s a really brief pause as compared to trucking all of that data from one computer to another.
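Here is a minimal sketch of that shared-memory idea, using Python threads as stand-ins for cores. The `spreadsheet` list, the worker count, and the function name are all made up for illustration. One caveat: in standard Python, threads take turns rather than running truly side by side, so this shows how the sharing works rather than a real speedup.

```python
import threading

# A stand-in for the spreadsheet: one list living in one memory space.
spreadsheet = [[row * 10 + col for col in range(10)] for row in range(1000)]

NUM_WORKERS = 4                      # hypothetical number of cores
row_totals = [0] * len(spreadsheet)  # shared results, one slot per row


def total_rows(start, stop):
    """Sum each row in [start, stop), reading straight from the shared list."""
    for i in range(start, stop):
        row_totals[i] = sum(spreadsheet[i])


# Each worker gets a range of row numbers, not a copy of the rows.
chunk = len(spreadsheet) // NUM_WORKERS
workers = [
    threading.Thread(target=total_rows, args=(n * chunk, (n + 1) * chunk))
    for n in range(NUM_WORKERS)
]
for worker in workers:
    worker.start()
for worker in workers:
    worker.join()  # wait until every worker has finished

grand_total = sum(row_totals)
print(grand_total)
```

Notice that the only things handed to each worker are two row numbers. Between separate computers, the rows themselves would have to make the trip.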

It’s Not One or the Other – It’s All of the Above!

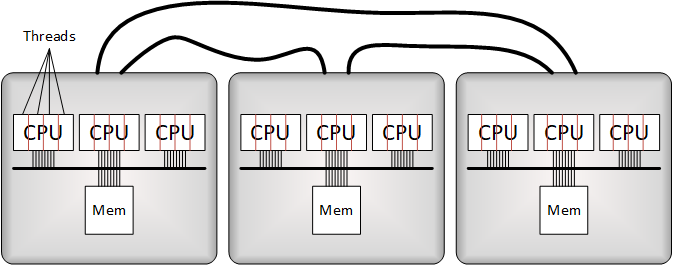

We started this line of discussion with threads, so remember also that each of these cores can have hardware multi-threading. In addition, don’t think of multicore as being instead of distributed computing; you can do both. So you can take major chunks of a problem and ship them to different boxes, and each of those boxes can then use multiple cores to solve its piece faster, and each of those cores can use multiple threads to be even more efficient.
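To make that layering concrete, here is a small sketch of how one job (adding up a list of numbers) might be carved up level by level. The counts of boxes, cores, and threads are hypothetical, and the loops here run one after another; in a real system, each level would hand its pieces out to run at the same time.

```python
def split(items, parts):
    """Split a list into `parts` nearly equal consecutive slices."""
    size, extra = divmod(len(items), parts)
    slices, start = [], 0
    for p in range(parts):
        # The first `extra` slices each take one leftover item.
        stop = start + size + (1 if p < extra else 0)
        slices.append(items[start:stop])
        start = stop
    return slices


# Hypothetical setup: 3 boxes, 4 cores per box, 2 threads per core.
BOXES, CORES_PER_BOX, THREADS_PER_CORE = 3, 4, 2


def solve(numbers):
    """Add up `numbers` by splitting the work across three levels."""
    total = 0
    for box_work in split(numbers, BOXES):                        # ship to boxes
        for core_work in split(box_work, CORES_PER_BOX):          # spread over cores
            for thread_work in split(core_work, THREADS_PER_CORE):  # then threads
                total += sum(thread_work)
    return total


work = list(range(1, 101))
print(solve(work))  # 5050
```

The answer matches what a single loop would give; the only thing that changes is who does which piece.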

Of course, in order for this all to work, programs have to be written in a way that takes advantage of this opportunity, and that’s not always easy. In the engineering world, there are lots of programs for hardware analysis and simulation that can take a really long time to run – like hours, days, or even weeks. Developers have completely rewritten many of those programs to take advantage of multiple threads, cores, and computers so that they can solve gigantic problems in a more reasonable timeframe.

Also, some problems naturally lend themselves to being broken up and solved in pieces; others, not so much. So there’s no real guarantee here. Luckily for you, you’re probably not having to write these programs, so our only goal here is that you understand what’s going on under the hood. There are also multiple ways to attack these problems, and, many years ago, I wrote a silly article for EE Journal where I used the making of apple pies in an Iron-Chef setting to illustrate this. You might have fun with it…
