[From the last episode: We looked at the role of a hard drive and the need to bring chunks of code from the hard drive into DRAM.]

Last time, we saw that we may need to load code from the hard drive into DRAM, and that creates some confusion about what addresses the processor should use when trying to find some code – like a function being called. And we posed the question of how the processor deals with this confusion. That’s what we’ll talk about here.

What the Compiler Sees

First, let’s think about the point when the program is being developed. The compiler that we use to transform human-understandable things into computer-understandable things has to determine where to store the different parts of the program. This matters, for example, when calling functions: a function call tells the processor to run the code located at a specific address, so there has to be a way of knowing what that address is. Part of the compiling process involves figuring out where all of those addresses will be.

But the compiler doesn’t know where the program is going to be stored in an actual system, and it also doesn’t know how much DRAM that system will have. That’s actually a good thing, because it means the same program can run on lots of different computers with different amounts of memory. So the compiler assumes that the whole program sits in one place, all together, and uses that assumption to decide where the memory locations will be.
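Here is a deliberately simplified illustration of that assumption. The sketch packs a program’s functions back to back, starting at virtual address 0000, as though the whole program occupied one contiguous block. The function names and sizes are made up. Real toolchains also handle alignment, separate data sections, and more, and in practice the linker, working alongside the compiler, assigns the final addresses.

```python
# Hypothetical function names and sizes in bytes, purely for illustration.
functions = [("main", 0x60), ("read_input", 0x90), ("compute", 0x70)]

def lay_out(funcs, base=0x0000):
    """Give each function a virtual start address, packing them back to back
    as if the whole program sat in one contiguous block starting at `base`."""
    addresses = {}
    next_free = base
    for name, size in funcs:
        addresses[name] = next_free  # this function starts where the last one ended
        next_free += size
    return addresses

layout = lay_out(functions)
for name, addr in layout.items():
    print(f"{name:<10} -> virtual {addr:04X}")
```

None of these addresses depends on how much DRAM the target machine has or where the program will actually end up in it. That independence is what makes the layout portable, and it is also why a translation step is needed later.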

But what happens when the system moves pages of the program from the hard drive into DRAM? The operating system (OS) comes to our assistance here, using a notion called virtual memory. The addresses that the compiler assigned won’t necessarily match where in physical DRAM the various things end up. So the compiled program’s addresses are considered virtual, not physical.

From the Virtual to the Physical

So, if the virtual addresses aren’t actually correct while the program is running, how does the program find the things it needs? This is the job of a memory management unit, or MMU. More complex processors have MMUs precisely so that they can handle this issue. The DRAM addresses are called physical addresses because that’s physically where the data resides when it’s used. The addresses as compiled are virtual addresses. The virtual addresses won’t work if the program uses them directly, because the program isn’t truly stored at those addresses. It’s the MMU that takes a virtual address and translates it to the physical address so that the program can correctly find the things it needs in DRAM.

So the processor will request, say, a function located at some virtual address. The MMU will intercept that request and look at the virtual address. One of two things will happen:

- If the virtual address is on a page that’s not yet in DRAM, then the MMU directs the system to grab that page from the hard drive (or flash) and load it into DRAM, possibly kicking out some other page, as we saw with cache. The MMU notes the virtual address range of that page and the physical address where the page was stored in DRAM, and it logs that information in a translation table. That table tells the MMU where in DRAM a given virtual address has been stashed. In other words, it translates virtual addresses to physical addresses.

- If the virtual address is on a page that’s already in DRAM, then the MMU uses the translation table to translate the virtual address into a physical address. The code is then fetched from DRAM at that physical address.
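The two cases above can be modeled in a few lines of Python. This is a toy under stated assumptions, not a description of how any real MMU is built. The page size (0x80 bytes), the frame addresses, the class name `ToyMMU`, and the least-recently-used eviction rule are all illustrative choices. In real systems, the MMU hardware signals a page fault and the OS handles the loading. Here, both jobs are folded into one class.

```python
from collections import OrderedDict

PAGE_SIZE = 0x80  # assumed page size in bytes (chosen to match this article's example)

class ToyMMU:
    """Toy model of demand paging: a translation table maps each virtual page
    number to the physical start address of the DRAM frame holding that page."""

    def __init__(self, frame_starts):
        self.free_frames = list(frame_starts)  # physical start addresses of empty DRAM frames
        self.table = OrderedDict()             # virtual page -> frame start, least recently used first

    def translate(self, vaddr):
        page, offset = divmod(vaddr, PAGE_SIZE)
        if page not in self.table:
            self._load(page)              # page not in DRAM yet: bring it in first
        self.table.move_to_end(page)      # record this page as the most recently used
        return self.table[page] + offset  # physical address = frame start + offset

    def _load(self, page):
        if self.free_frames:
            frame = self.free_frames.pop(0)
        else:
            # DRAM is full: kick out the least recently used page and reuse its frame
            _evicted_page, frame = self.table.popitem(last=False)
        # A real system would copy the page's contents from disk into DRAM here.
        self.table[page] = frame


# Two DRAM frames, starting at physical 0010 and 0090 (hypothetical layout)
mmu = ToyMMU([0x0010, 0x0090])
print(hex(mmu.translate(0x0000)))  # 0x10: page 0 loaded into the first free frame
print(hex(mmu.translate(0x0140)))  # 0xd0: page 2 loaded into the second frame
```

Evicting the least recently used page mirrors the cache idea of replacing whatever hasn’t been touched in a while. Real operating systems use a variety of replacement policies, often approximations of LRU.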

A Virtual Example

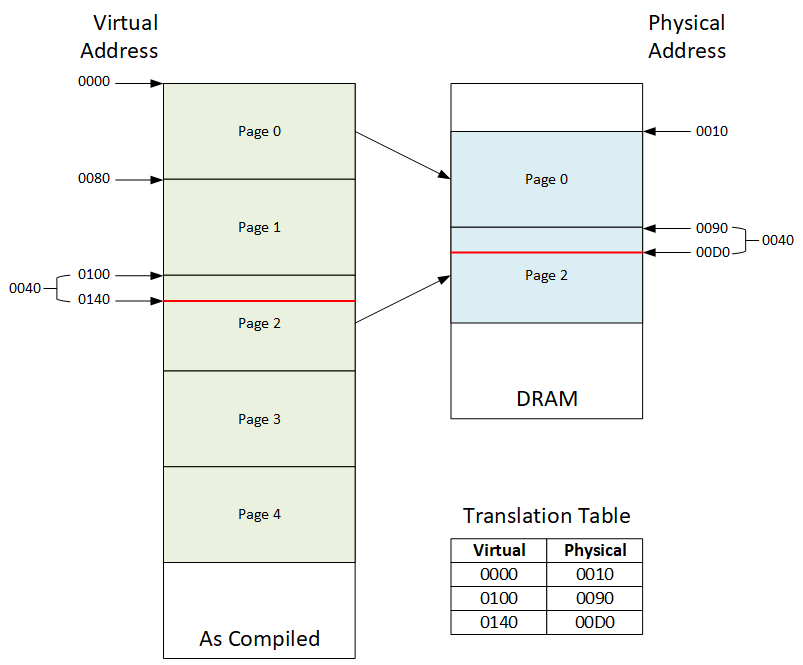

The diagram above illustrates this. Let’s say that the program starts running at virtual address 0000, so that page (page 0) has to be loaded into DRAM first. As illustrated, it happens to land at physical address 0010. The program keeps running code from page 0, translating addresses as needed, until it reaches a call to a function at virtual address 0140. That address isn’t in page 0; it’s in page 2.

So now page 2 gets loaded into DRAM, in this case right after page 0. This illustrates that, in DRAM, the pages may not be laid out in the same order as in the virtual version. The translation table now shows that page 2, which starts at virtual address 0100, has been loaded at physical address 0090. Specifically, virtual address 0140 now shows up at physical address 00D0 (remember that these are hexadecimal addresses).

In reality, the translation table might not list each and every address; it may simply record where each page starts and then use offsets to reach the needed address. For instance, virtual address 0140 is 0040 away from the start of page 2 (virtual address 0100). So, more likely, the MMU will note that address 0140 falls within the range of page 2 and then add that 0040 offset to the physical start of page 2, which is 0090, giving physical address 00D0.
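The range-plus-offset lookup can be checked with the numbers from this example. The article does not state the page size of 0x80 bytes; it is inferred from the example. Page 2 begins at virtual 0100, so each page spans 0100 / 2 = 0080 bytes. That size is consistent with page 0 occupying physical 0010 through 008F, just before page 2 at 0090. The function name `to_physical` is hypothetical.

```python
PAGE_SIZE = 0x80  # inferred: page 2 begins at virtual 0100, so each page spans 0x80 bytes

# Translation table from the example: virtual page number -> physical start in DRAM
translation_table = {0: 0x0010, 2: 0x0090}

def to_physical(vaddr):
    page = vaddr // PAGE_SIZE    # which page the virtual address falls in
    offset = vaddr % PAGE_SIZE   # how far the address is from the start of that page
    if page not in translation_table:
        # Page not in DRAM yet; a real system would load it at this point.
        raise LookupError(f"page {page} is not in DRAM yet")
    return translation_table[page] + offset

print(f"{to_physical(0x0140):04X}")  # 00D0
```

Because 0x80 is a power of two, hardware doesn’t actually need to divide. The page number is just the upper bits of the address, and the offset is the lower seven bits.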

This is how virtual memory works. It can be a bit mind-spinning until you’re used to it (even for engineers), so there’s no shame in reading this a couple of times if necessary for it to make sense (or in asking questions in the comments). Next week, we’ll step back and review the entire memory system to pull it all together.
